(but was afraid to ask)

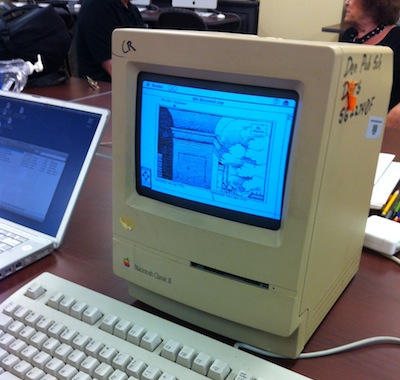

The first session I dropped into at the ELO Conference was the "Archiving Workshop". The eye candy here is Bill Bly's hypertext piece We Descend, running on the Mac Classic platform that he originally started writing it on. (That's System 6-point-something, I believe.) Enjoy the pixelly nostalgia!

The point, of course, is that getting data off of such antique equipment is a permanent and increasing headache. (This Mac was borrowed from a library, so Bly had the equal headache of trying to get the app onto it from his much newer Mac laptop.) The piece was originally written with a proprietary tool, Storyspace -- an old version which is no longer supported on current OSes. (He has since updated the project to the current, but still proprietary, Storyspace 2.)

The whole notion of archiving and replaying digital art (and games, etc) is rife with these issues. See, for example, the ELO's 2004 publication titled Acid-Free Bits.

The session turned into a lively roundtable discussion, and in the course of that, I popped in a mention of the IF Archive. I knew our community is pretty good about that stuff -- I've always been proud of it, and to be part of it -- but I was startled when some of the other participants vociferously lionized us for it. "The IF community is the gold standard for archiving!" Exact quote. Yikes!

(Apologies, by the way: I'm not going to even try to remember everybody's name. This post will be all "somebody" and "that guy". Feel free to comment and fill in blanks.)

The discussion alternately surprised and startled me with the different assumptions that people had about archiving. Like, for example, everybody thought this was a hard problem and the IF people had done something very difficult in solving it. "It's a distributed, mirrored archive containing every IF game ever created. When you put it that way, it does sound kind of impressive!"

Okay, that was Aaron Reed who said that. I know his name. But point taken.

Let me try to tease out some of those assumptions, what turns out to be easy, and what's probably more difficult than it looks. I will do this in the best way: with a set of Taoist aphorisms!

The sage does not try to solve all problems forever; the sage solves this problem now.

...Because that way, the next sage to come along will say "Hey! I've got a solution to the next problem!"

...And that sage will be smarter than you, because everyone will have learned from your experience.

...And that sage will be building on your work, not trying to replace it with a NEW all-problems-solved-forever plan.

...Also, your job will a hell of a lot cheaper this way.

Okay, that was pretty gnomic. And inconsistently third-person. I'd better go into more detail... a lot more detail. I will do this in the second-best way: with a series of context-free quotes!

(This will no doubt repeat a lot of the Acid-Free Bits territory. Much remains the same from 2004. However, I am coming at some of this from different angles.)

You can do that because your platform hasn't changed since 1983!

This is indeed a significant point about IF. I still write (some) games for the Z-machine, and the Z-machine has changed very little over time. The community's extreme conservatism about technology has made IF archiving easier.

However, don't confuse conservatism with stasis. In 1995, I uploaded Z-code files via FTP. Today, we have a HTTP upload form, and most games are packed in Blorb archives (with metadata and cover art). In 1996, Graham Nelson tweaked the Z-machine spec to double its memory. A few years later, I started developing Glulx, a 32-bit Z-machine replacement. All of these improvements have been absorbed into the community without much pain.

The value here is not rigidity, but a community commitment to (1) public standards; (2) open-source tools; (3) multiple implementations on multiple platforms; (4) backwards compatibility. (I should add something about "good layering hygiene", but that gets too technical for this post. Also, I'd have to look up how to spell "hygiene".) The result is that as platforms age and new ones appear, people are confident about porting and updating software, and are also confident that their old efforts will remain viable. This is true not just of IF games, but of other tools and resources -- disassemblers, metadata catalogs, the whole panoply.

There's another layer of conservatism which I should mention, though. We've stuck tightly over time to a stable concept of what an IF game is -- the interface model. That's an abstraction but it's important! The ELO archiving discussion struggled with the question of "what is it to archive?" Can you really re-live a 1981 arcade game via MAME? Does the original screen size matter, the trackball or the one-button mouse, the CRT fuzz, the slow loading times...? At what level do we recreate the experience?

In the IF world we've largely bypassed these questions, because we have a notion of IF in terms of abstract capabilities. Lines of text come in, a stream of text goes out. Recreating an IF work in a web browser or a teletype machine or a tablet or a Twitter stream is an interface change; it alters the experience but we still recognize it as the same work.

The larger world of hypertext and electronic literature doesn't have such a unity of abstraction. Those works are nearly all interface one-offs. So that's harder. I don't have good answers. For a good many years the desktop-computer interface ("windows, mouse, pointer") looked like the universal (albeit weak) abstraction, but now it's mobile and tablet time; that illusion is shattered. (The sight-impaired community would have told you it was crap all along. Their support and love of the IF world is no surprise, but it can be precisely framed in this model: IF's interface abstraction is high-level enough that a voice-synthesizer can cleanly support it.)

Of course, IF has begun to expand its bounds recently: we're getting menu models, keyword models, hybridizations with other game genres. This too is progress! But it will be uncomfortable progress in these respects. The old abstractions now... leak. Here, too, I have no good answers. We're making it up as we go.

It's worth noting Aaron Reed's comment about his work maybe make some change. It exists as an multimedia Web page, but also as a traditional IF game file.

To preserve the work past the future browser updates that will inevitably render it unplayable, this Glulx version attempts to capture the spirit of the original within the limitations of the more feature-limited, but much longer lasting, Glulx virtual machine.

Start by saving the files.

At one point of the discussion, a whole lot of eyes converged on me with the question: "How do you do it? What do you preserve?" Screenshots? Text dumps? Videos of people interacting with the work?

I gave what was (to me) the obvious answer: "First, save the files." But this was not an obvious answer to everybody! What files? What are files?

I have a feeling that the electronic-lit community is scarred by early experiences with arcane, 1990s-era hypertext systems. These systems were, by and large, proprietary and closed. If they're not dead, they've mutated in backwards-incompatible ways. (Storyspace is only one example; it's easy to find people who still mourn Hypercard.)

I think people have the sense that "the original work" here is aging 3.5" floppy disks -- the physical artifacts -- for which preservation is a fraught, technically difficult, imperfect process. "Even if we copy the floppies to CD," people said, "that doesn't solve the problem! In ten years CDs might be obsolete!" (True enough.) "We'd be right back where we started."

But this is not how I view data retention at all! In fact, I'll make the same point I did before: we have an abstraction. It's called "the file". More specifically, the binary or "raw" file. It's a fixed-length sequence of 8-bit bytes. Optionally, but usefully, you can attach metadata called "a filename" and "format description".

The era of computer networking, and ultimately the Internet, has cemented a really good set of conventions for dealing with files. We fling files around with abandon. You may not know what to do with a file, but if you don't have the file, you've got nothing. And if you do have the file, you can keep it forever. Not automatically -- you still have to make the effort to preserve the thing -- but there will be a way to preserve it, because files are our basic abstraction of data handling.

This assumption is ingrained to the point where I don't even think about it, but clearly it's worth picking out.

(It is not a coincidence that Jason Scott's entree in the archiving world was a web site called "textfiles.com".) (No, I don't intend to get into the difference between "text files" and "binary files" right now. :)

What does this mean for hypertext? On a modern computer, "save" or "back up" or "export" your work. If your stuff is on an antique Mac, make StuffIt or BinHex archives and get them off. If you can't do that, make a Mac disk image. Now it's a file and you can do something with it -- you can get it online. The technology is out there. Heck, last week a guy was showing me slides of the annual Apple 2 festival -- they can get files onto and off of Apple 2 machines from 1978. Jordan Mechner got his files online. You can too.

Now this leaves two problems. What do I do with this file? And how do I save it forever?

You don't have to know the answers right now. Maybe you wrote some work with an obscure software package which hasn't been seen since 1996. Your disk image is useless. But that's a research problem! Maybe a lost copy of the app will resurface. Maybe it will go open-source. Maybe somebody will hack the software. (All my IF work comes from a toolchain that began with the reverse-engineering of Infocom's proprietary engine.)

As for changing storage formats -- the answers will arrive, as long as you care to look for them. You aren't looking for a hundred-year solution! You just need a solution which is standard enough, and popular enough, that people will give you a migration path to the next solution.

(The value of a community archive, obviously, is that one team can commit to migrating the storage for everybody's work.)

All the files from my first 1993 Macintosh are preserved on my brand-new 2011 Macintosh. (Okay, well, actually they're off on a NetBSD server somewhere. But let's not spoil the lovely cyclical image.) They're wrapped up in onion-skin layers of compression and packaging, but I can get them out if I need them, because I've used well-known compression and packaging utilities. (Some of which now exist only as reverse-engineered tools. But they exist!) Some of those transfers went by 3.5" floppy, some by Ethernet cable, some by wifi, some by FTP or SSH. I don't know what the next one will be. Don't need to.

The IF Archive has never tried to get a grant.

Some of the academic people mentioned the difficulty of fitting electronic literature into a traditional university world. Is it hosted by the art department, the literature department, computer science...? What does long-term archiving mean if the department head flees for California, or the new Dean says "Stop bothering me with this nonsense"?

The IF Archive is hosted by the School of Computer Science at CMU. But this is in no way a CMU-sponsored project. Holy zog, I can't even imagine the paperwork.

It lives there because a friend of IF works for CMU -- doing IT support, mind you -- and he stuck the server box in his office. He asked the department "Hey, we're running this thing, it's got historical value, it doesn't take much bandwidth. Is that okay?" And the department said "Sure."

Is that a permanent solution? Of course not. In 1995 the Archive was at Gesellschaft für Mathematik und Datenverarbeitung mbH; some day it'll be somewhere else. The Archive is just a web server, and can easily be relocated to any reliable hosting service on the Net.

In one sense the problem is trivial -- web hosting is cheap. In another sense, the problem is of community-building, and that's hard. Why does the IF community keep uploading files to the same archive, decade after decade? Trust, faith, continuity. At one point in the workshop I said "You need a cabal." I was half-joking, but only half. The serious part is that you need some people who will still be around in ten years. The joke part is that the "cabal" is not self-declared, nor created ab initio: it's the people who have already been contributing for years, the names everybody knows because they (we) have been around.

Yeah, that last paragraph was pretty self-horn-tooting. Sorry about that. I think it's important to analyze these things, and I am sort of in the middle of this one.

I can't generalize, or even prove this solidly, but I'd say that decentralization has been an important part of keeping the IF community going. I will return to this point.

Make it HTML and Javascript.

Archiving source code is one thing; recreating interactive works is quite another.

In general, you need emulators, ports, or reimplementations. All of those take effort. I will here focus on just one aspect of the problem: what platform should you build your emulator, port, or implementation on?

Answer: HTML and Javascript. That's been the answer for at least five years now, and it shows no sign of losing traction. In the tech world there is no question of this. It's not just a universal computing platform, it's easier to use than any earlier platform. People have web browsers, and they'll click on a link where they would hesitate to download an app. We ported our IF interpreters to HTML/Javascript. MAME is being ported to Javascript.

The roundtable discussion ran into another worldview clash when I brought this up. "HTML isn't portable. Which browser do you target?" It's gotten a lot better, you know, folks. "Yeah, sure, they've been promising us that for years now!" Uh, well, I can't disagree.

But it has been getting better. Web sites, by and large, work for everybody. IE6 is dead and IE7 is (surprisingly) even deader. Big, highly-paid teams at Google/Microsoft/Apple are working their butts off on improving their web browsers, and "improved" does mean "more compatible". And when they fall down, there's jQuery. We on the fringes of the tech industry can only gain from riding those coattails.

There are of course gaps. Video and audio support for browsers are basically okay for standalone "here is a video or audio track" web pages, but they cannot yet be reliably integrated into an interactive multimedia experience. Which, yes, we want to do. But -- again -- progress is happening; the turbid mire of W3C working groups and tech-industry giants is scary (for zog's sake don't read about the video codec wars) but not hopeless.

Also, not all digital art needs video and audio. If you're looking at a hypertext piece from the really old days, with text and simple graphics, you have no technical obstacles at all. Get to it. (Nearly all IF falls into this category, obviously.) Simple animation? Javascript is probably good for that too.

If you need more graphical oomph, Java may suffice (perhaps via Processing). Java is going through some open-standard turbulence these days, so I can't recommend it whole-heartedly, but it does run on things.

Once again, I am not offering a universal or permanent solution. One day HTML and Javascript will be relics... but they will be well-supported relics. If the hackers of 20xx support one antique 2012 technology, it will be Javascript, because so much of the 2012 Internet ran on it.

(And if your work winds up on an Apple 2 emulator running on a Javascript emulator running on a quantum foobly-foo emulator running on a nudged quark -- hey, that's fine. You'd be surprised how much of today's technology looks like that, under the surface. Onion-skin all the way down.)

It's also worth noting that while Javascript is a good execution target platform, it's a mistake to archive only the Javascript version of a work. The Javascriptification process may obfuscate the source code -- that is, obscure traces of the author's original thought process. Always keep the original source code, project file, disk image, or whatever it is you were working from.

The IF Archive has no metadata.

("No metadata? What are you maaaaaad?" I know, I know. Stay with me.)

After the workshop, somebody came up to me in puzzlement and waved his browser tabs. "When you were talking about the Archive, did you mean ifarchive.org or ifdb.tads.org? They seem to be different sites."

True. And perhaps unnecessarily confusing. But there's a reason, and maybe even a good reason behind the reason.

When the Archive started up, as I said, it was an FTP server. It stored files with... well, with minimal metadata: a filename, a very simple hierarchical organization ("/if-archive/games/zcode/curses.z5"), and a small bibliographical note saying who wrote what and when. The note wasn't even formatted for machine-parsing. Not the Semantic Web, by a long shot.

Over time, we shifted to HTTP, and offered the bibliographic data on an HTML page. But everything else stayed the same. (The old path for that game still works!)

Could we have been more ambitious? Certainly. But continuity and laziness seemed like more attractive virtues to cultivate. This was, after all, a fringes-of-spare-time project for everybody involved.

People regularly requested better search features, but I never got around to implementing them. In 2006, Graham Nelson proposed a grand unified scheme for identifying IF and collecting metadata on it. Much of that scheme has been adopted, but again, I didn't get around to the part where the Archive would host queryable metadata.

This goes well past the virtue of laziness and into the culpable version. I apologize for that, if it makes any difference at this late date. For the purposes of this post, the point is that Michael J. Roberts got fed up with the situation. In 2007 he built the IF Database site.

This was unquestionably the best possible outcome for the community. Mike's IFDB is far beyond the Babel scheme's vision: it hosts bibliographic data, community ratings and reviews, tagging, user groups, and a much more powerful search facility than I could have built.

To be clear, there is no question of usurpation here. The Archive stores files; IFDB stores metadata, and links to the Archive. The two sites form a tidy symbiosis.

(Mind you, I really need to pester Mike for an IFDB database dump. No reason not to host that on the Archive, as a backup...)

I admit that ignoring features until your users build replacements isn't a great way to run a circus. But the Archive is run on a principle of "Do one clearly-defined thing" -- and I think that is a strength. Whoever was going to set up an Archive search service had a simple hierarchy of files to deal with; and the search service would have been an addition to the original service, not a replacement for it.

Along the same lines, we have an IF Wiki and an IF discussion forum. All four of these services are on different hosts, run by different people. They all exist harmoniously and without competition (pace a degree of redundancy between the wiki and IFDB).

I lay all this out because I think that our decentralized, cooperative model has served us better than any Universal IF Service. Individuals come and go (and, on the Internet, sometimes come and go and have flameouts and flounce-outs and schisms, all in the same month). We've had an excellent record of stability over the years, but there are no guarantees. If something or somebody goes blooey, better that only a fraction of our online activity is interrupted.

This model is also more friendly to evolutionary changes. It wasn't too many years ago that IF discussion was centered in Usenet (the rec.arts.int-fiction and rec.games.int-fiction newsgroups). The web forum was founded by Mike Snyder as an alternative, to the disgruntlement of many Usenet regulars. (Very much including myself!) But over time, the center of gravity has shifted. I won't try to analyze the reasons here, but the result is undeniable. Today, the old Usenet groups loll in benign neglect, attracting a handful of posts per month. The web forum is where we're at.

Note that Mike Snyder was not empowered to do this job by a committee (much less a cabal). He set up a web site, installed phpBB, and posted (on Usenet!) saying "Here it is." The same was true of Mike Roberts and his IFDB scheme. This, too, is a community virtue -- encouraged both directly by our members, and indirectly by our "do one clearly-defined thing" value. By not over-reaching, we leave room for our friends to -- well, to reach.

(And yes, I may yet set up an Archive metadata service, as specified in the 2006 agreement. At the moment, there doesn't seem to be a strong need. But if I did it, I'd import the data from IFDB.)

And who is going to do all this work, smarty-pants?

I don't have an answer to that. Smarty-pants.

Setting up a wiki or a discussion forum is trivial -- any reputable web-hosting service will let you register a domain and do the setup inside of twenty minutes. Finding somebody to moderate the thing, clean spam, and keep the software updated so that it doesn't turn into a sea of off-brand Chinese handbags -- that's more work.

Getting authors to upload disk images or backups of their work is a pain in the butt, because it involves hassling people.

Translating interactive works into Javascript for posterity is a lot of work. No getting around that. One of the suggestions that arose was to teach a class on "digital archiving and preservation", take your gang of hack-happy undergrads, and turn them loose on classic works. Of course, the list of works one might want to archive (even the subset for which you've got permission from the author) will dwarf any conceivable workforce one could assemble. If you come up with an alternative miracle, let me know.

And in conclusion...

Back to the aphorisms, I guess. Pick a task. Do it. Don't get lost in grandiose plans. The perfect is the enemy of the okay-for-now. Success is not measured by how it looks on day 1, but by whether you've kept it regularly updated and massaged and tidied on day 366. (Not to mention day 3653, but again, skip the grandiose plans to begin with.)

Live. Live. Live.